Arbin Integrated Apache Kafka for Data Streaming Solutions

Arbin's SQL database solutions are designed for structured data management and transaction processing using relational models. They store data in tables with defined schemas and support SQL for querying and managing data.

They are suitable for applications requiring complex queries, data integrity, and transactional support.

However, modern event-driven architectures, real-time analytics, data pipelines, log aggregation and processing, and microservice integration call for a platform that excels at real-time event streaming, high-throughput data pipelines, and distributed data processing, such as Apache Kafka.

Arbin has integrated Apache Kafka into our latest MITS release.

Advantages

Storage

Stores data in topics, which are further divided into partitions. Kafka retains data for a configurable period, allowing for replay of events.
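As a rough illustration of the topic, partition, and replay model described above, the sketch below uses a toy in-memory class. It is not Kafka client code: `ToyTopic`, its methods, and the CRC32-based key hashing are all assumptions made for the example (Kafka's own partitioner and retention settings work differently in detail).

```python
import zlib
from typing import List, Tuple


class ToyTopic:
    """Hypothetical in-memory stand-in for a topic split into partitions."""

    def __init__(self, num_partitions: int) -> None:
        # Each partition is an append-only list; the list index plays the role of an offset.
        self.partitions: List[List[str]] = [[] for _ in range(num_partitions)]

    def append(self, key: str, value: str) -> Tuple[int, int]:
        # Records with the same key always map to the same partition.
        # CRC32 is only a stand-in hash chosen for this sketch.
        partition = zlib.crc32(key.encode("utf-8")) % len(self.partitions)
        self.partitions[partition].append(value)
        return partition, len(self.partitions[partition]) - 1

    def replay(self, partition: int, from_offset: int = 0) -> List[str]:
        # Retained records can be read again from any earlier offset.
        return self.partitions[partition][from_offset:]


topic = ToyTopic(num_partitions=3)
partition, _ = topic.append("channel-1", "reading-a")
topic.append("channel-1", "reading-b")
print(topic.replay(partition, from_offset=0))
```

Because both records share the key `channel-1`, they land in the same partition in order, and a consumer can re-read them from offset 0 for as long as they are retained.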

Real-Time Data Processing

This capability enables immediate analysis of battery performance, early error detection, and prediction of potential failure conditions. For example, Kafka Streams can be utilized to calculate real-time performance metrics, compare them against set thresholds, and trigger alerts or adjustments to the testing process if anomalies are detected.
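The threshold check described above might look something like the following sketch. It is written in plain Python rather than Kafka Streams (a Java library), and the `Reading` fields, the limit values, and the `find_alerts` function are hypothetical names chosen for illustration, not part of the Arbin integration.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass
class Reading:
    channel: int        # hypothetical test channel id
    voltage: float      # volts
    temperature: float  # degrees Celsius


# Assumed limits for the example; real thresholds depend on the cell under test.
MAX_VOLTAGE = 4.25
MAX_TEMPERATURE = 60.0


def find_alerts(readings: Iterable[Reading]) -> Iterator[str]:
    """Yield one alert message per threshold violation, in arrival order."""
    for r in readings:
        if r.voltage > MAX_VOLTAGE:
            yield f"channel {r.channel}: voltage {r.voltage:.2f} V exceeds {MAX_VOLTAGE:.2f} V"
        if r.temperature > MAX_TEMPERATURE:
            yield f"channel {r.channel}: temperature {r.temperature:.1f} C exceeds {MAX_TEMPERATURE:.1f} C"


stream = [Reading(1, 4.10, 25.0), Reading(2, 4.30, 61.5)]
for alert in find_alerts(stream):
    print(alert)
```

In a real deployment the `stream` list would be replaced by records consumed from a Kafka topic, and the alert would feed whatever notification or control path the test process uses.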

Scalability

Arbin's Kafka integration offers excellent scalability, making it easy to expand as projects grow. When additional test equipment is added or data volume increases, Kafka's distributed architecture allows brokers and partitions to be added so the system can handle the larger datasets without significant performance degradation.

Latency

Designed for low-latency, high-throughput event streaming. Suitable for real-time data processing with minimal delay.

Throughput

Handles high-throughput data streams efficiently, making it suitable for big data use cases.

Consistency

Provides eventual consistency in a distributed environment. Messages are durable and replicated across brokers, but consumers might not see changes instantaneously.

Architecture Overview

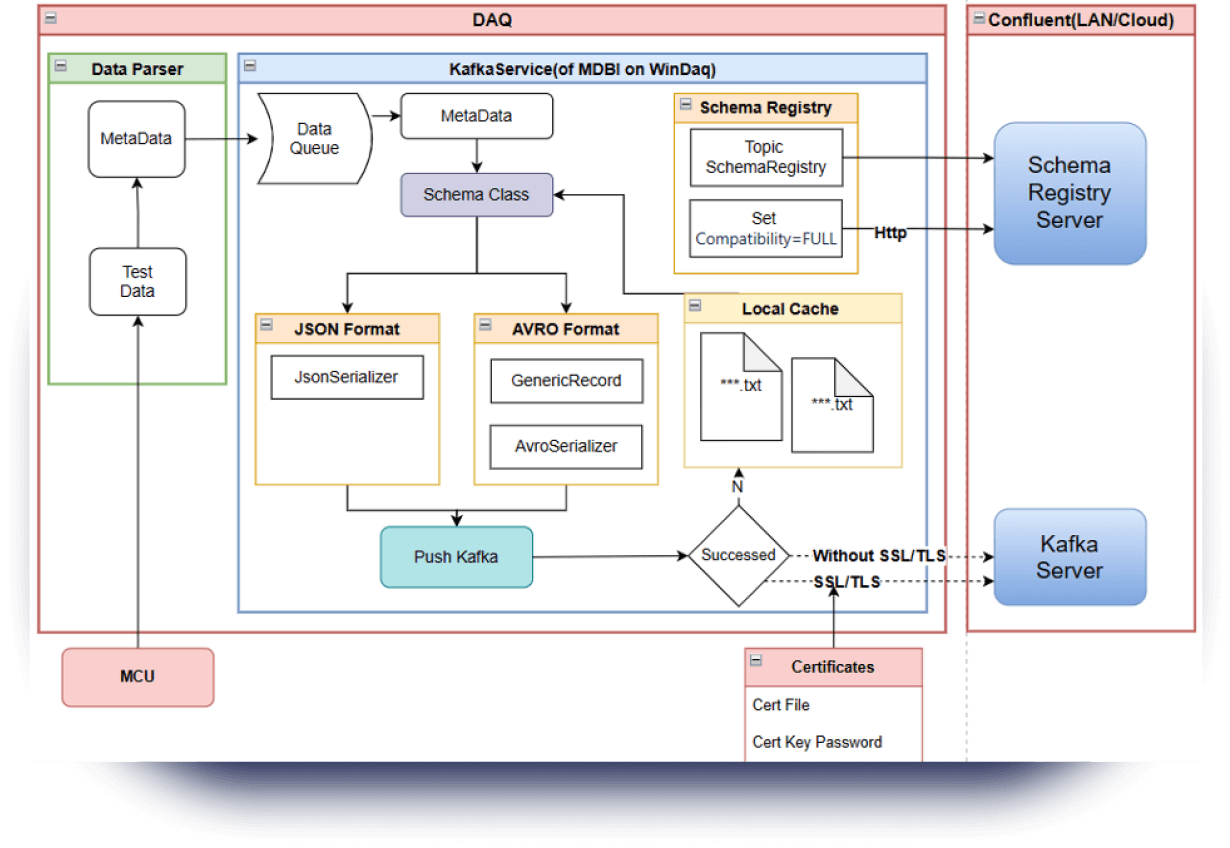

Arbin's Apache Kafka integration supports multiple data formats for pushing data to the Kafka cluster: JSON, Avro, and a binary format. This gives consumer applications greater flexibility in choosing a data format.
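To make the trade-off between formats concrete, here is a minimal sketch that encodes the same record as JSON and as a fixed-layout binary payload using only the Python standard library. The field names and the binary layout are assumptions for the example and do not describe Arbin's actual message schema; Avro is omitted because it needs a schema definition and a third-party library.

```python
import json
import struct
from typing import Tuple

# Hypothetical layout: channel id (uint16), voltage and current (float64), little-endian.
RECORD_LAYOUT = struct.Struct("<Hdd")


def to_json(channel: int, voltage: float, current: float) -> bytes:
    """Self-describing text payload: larger, but readable without a schema."""
    record = {"channel": channel, "voltage": voltage, "current": current}
    return json.dumps(record).encode("utf-8")


def to_binary(channel: int, voltage: float, current: float) -> bytes:
    """Compact fixed-size payload: the consumer must know the layout in advance."""
    return RECORD_LAYOUT.pack(channel, voltage, current)


def from_binary(payload: bytes) -> Tuple[int, float, float]:
    """Decode a payload produced by to_binary."""
    return RECORD_LAYOUT.unpack(payload)


json_payload = to_json(3, 3.7, 1.5)
binary_payload = to_binary(3, 3.7, 1.5)
print(len(json_payload), len(binary_payload))
print(from_binary(binary_payload))
```

The binary payload is smaller, while the JSON payload can be inspected and parsed by any consumer without shared layout knowledge.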

We also built an additional layer of data protection: an internal in-file cache (local cache). If the Kafka cluster is unavailable, the cache file logs all data received from the MCUs while the Kafka service is offline.

Once the Kafka service resumes, the cached data is pushed to Kafka first; MITS logging of new data catches up once the cache file is empty.
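The cache-then-replay behavior can be sketched roughly as follows. This is a simplified illustration under stated assumptions, not Arbin's implementation: `CachedPublisher` is a hypothetical class, `send` stands in for whatever function delivers a payload to Kafka, and a `ConnectionError` is assumed to signal that the Kafka service is offline.

```python
import json
import os
from typing import Callable


class CachedPublisher:
    """Hypothetical publisher that falls back to a local cache file."""

    def __init__(self, send: Callable[[bytes], None], cache_path: str) -> None:
        self._send = send              # assumed to raise ConnectionError when offline
        self._cache_path = cache_path  # one JSON record per line

    def publish(self, record: dict) -> None:
        line = json.dumps(record)
        try:
            self._drain_cache()        # cached records go out before new data
            self._send(line.encode("utf-8"))
        except ConnectionError:
            # Service is offline: append the new record to the cache file.
            with open(self._cache_path, "a", encoding="utf-8") as f:
                f.write(line + "\n")

    def _drain_cache(self) -> None:
        if not os.path.exists(self._cache_path):
            return
        with open(self._cache_path, encoding="utf-8") as f:
            pending = [ln.rstrip("\n") for ln in f if ln.strip()]
        for i, ln in enumerate(pending):
            try:
                self._send(ln.encode("utf-8"))
            except ConnectionError:
                # Keep only the records that were not delivered, then give up for now.
                with open(self._cache_path, "w", encoding="utf-8") as f:
                    f.writelines(p + "\n" for p in pending[i:])
                raise
        os.remove(self._cache_path)    # cache is empty; normal publishing resumes
```

Draining the cache before sending new data preserves the original record order across an outage, which matches the "cache first, new data later" sequence described above.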

Apache Kafka On-Premises

Advantages

Customization

Full control over Kafka configurations and tuning parameters. You can customize settings to meet specific performance and operational requirements.

Infrastructure Control

Ability to control the underlying hardware and network configurations, which can be crucial for optimizing performance and ensuring compatibility with other systems.

Data Sovereignty

Data remains within your organization's infrastructure, which is essential for compliance with data residency and sovereignty regulations.

Security

Implement and enforce stringent security measures tailored to your organization's requirements, such as network security, access controls, and data encryption.

Apache Kafka On Cloud Service

Cloud-based Apache Kafka services, such as Confluent Cloud, Amazon MSK (Managed Streaming for Apache Kafka), and Azure Event Hubs (Kafka endpoint), offer numerous advantages over on-premises deployments.

Advantages

Operational Overhead

Cloud Kafka services handle much of the operational burden, including setup, configuration, monitoring, patching, and upgrades. This reduces the need for in-house expertise and resources dedicated to Kafka management.

Automated Scaling

Managed services automatically scale up or down based on demand, eliminating the need for manual intervention to adjust resources.

Arbin Apache Kafka Configurations